Table of Contents

This chapter covers MySQL InnoDB cluster, which combines MySQL technologies to enable you to create highly available clusters of MySQL server instances.

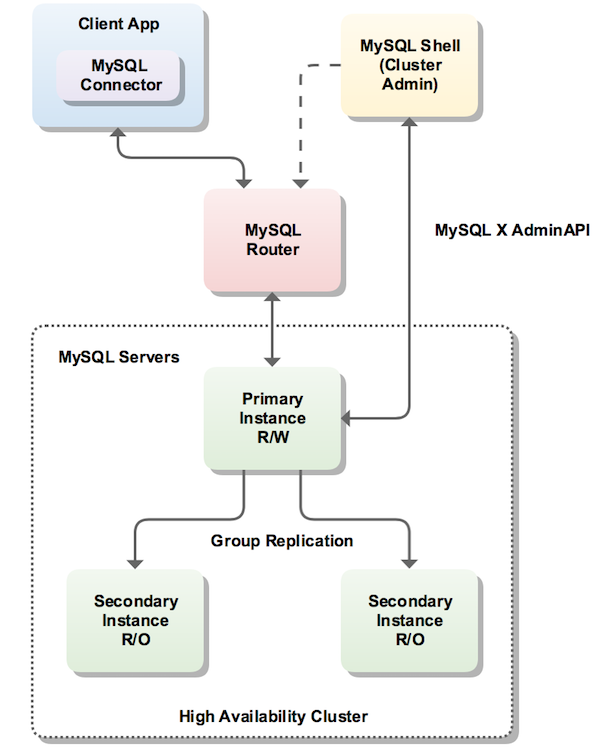

MySQL InnoDB cluster provides a complete high availability solution for MySQL. MySQL Shell includes AdminAPI which enables you to easily configure and administer a group of at least three MySQL server instances to function as an InnoDB cluster. Each MySQL server instance runs MySQL Group Replication, which provides the mechanism to replicate data within InnoDB clusters, with built-in failover. AdminAPI removes the need to work directly with Group Replication in InnoDB clusters, but for more information see Chapter 18, Group Replication which explains the details. MySQL Router can automatically configure itself based on the cluster you deploy, connecting client applications transparently to the server instances. In the event of an unexpected failure of a server instance the cluster reconfigures automatically. In the default single-primary mode, an InnoDB cluster has a single read-write server instance - the primary. Multiple secondary server instances are replicas of the primary. If the primary fails, a secondary is automatically promoted to the role of primary. MySQL Router detects this and forwards client applications to the new primary. Advanced users can also configure a cluster to have multiple-primaries.

InnoDB cluster does not provide support for MySQL NDB Cluster.

NDB Cluster depends on the NDB storage

engine as well as a number of programs specific to NDB Cluster which

are not furnished with MySQL Server 8.0;

NDB is available only as part of the MySQL

NDB Cluster distribution. In addition, the MySQL server binary

(mysqld) that is supplied with MySQL Server

8.0 cannot be used with NDB Cluster. For more

information about MySQL NDB Cluster, see

Chapter 22, MySQL NDB Cluster 8.0.

Section 22.1.6, “MySQL Server Using InnoDB Compared with NDB Cluster”, provides information

about the differences between the InnoDB and

NDB storage engines.

The following diagram shows an overview of how these technologies work together:

MySQL Shell includes the AdminAPI, which is accessed

through the dba global variable and its

associated methods. The dba variable's

methods enable you to deploy, configure, and administer InnoDB

clusters. For example, use the

dba.createCluster() method to create an

InnoDB cluster.

MySQL Shell enables you to connect to servers over a socket connection, but AdminAPI requires TCP connections to a server instance. Socket based connections are not supported in AdminAPI.

MySQL Shell provides online help for the AdminAPI. To

list all available dba commands, use the

dba.help() method. For online help on a

specific method, use the general format

object.help('methodname'). For example:

mysql-js> dba.help('getCluster')

Retrieves a cluster from the Metadata Store.

SYNTAX

dba.getCluster([name][, options])

WHERE

name: Parameter to specify the name of the cluster to be returned.

options: Dictionary with additional options.

RETURNS

The cluster object identified by the given name or the default cluster.

DESCRIPTION

If name is not specified or is null, the default cluster will be returned.

If name is specified, and no cluster with the indicated name is found, an error

will be raised.

The options dictionary accepts the connectToPrimary option,which defaults to

true and indicates the shell to automatically connect to the primary member of

the cluster.

EXCEPTIONS

MetadataError in the following scenarios:

- If the Metadata is inaccessible.

- If the Metadata update operation failed.

ArgumentError in the following scenarios:

- If the Cluster name is empty.

- If the Cluster name is invalid.

- If the Cluster does not exist.

RuntimeError in the following scenarios:

- If the current connection cannot be used for Group Replication.

MySQL Shell can optionally log the SQL statements used by AdminAPI operations (with the exception of sandbox operations), and can also display them in the terminal as they are executed. To configure MySQL Shell to do this, see Logging AdminAPI Operations.

One of the core concepts of administering InnoDB cluster is understanding connections to the MySQL instances which make up your cluster. The requirements for connections to the instances when administering your InnoDB cluster, and for the connections between the instances themselves, are:

only TCP/IP connections are supported, using Unix sockets or named pipes is not supported. InnoDB cluster is intended to be used in a local area network, running a cluster of instances connected over a wide area network is not recommended.

only MySQL Classic protocol connections are supported, X Protocol is not supported.

TipYour applications can use X Protocol, this requirement is for AdminAPI.

MySQL Shell enables you to work with various APIs, and supports

specifying connections as explained in

Section 4.2.5, “Connecting to the Server Using URI-Like Strings or Key-Value Pairs”. The

Additional Connection parameters are not

supported by InnoDB cluster. You can specify connections using

either URI-type strings, or key-value pairs. This documentation

demonstrates AdminAPI using URI-type connection strings.

For example, to connect as the user

myuser to the MySQL server instance

at www.example.com, on port

3306 use the connection string:

myuser@www.example.com:3306

To use this connection string with an AdminAPI operation

such as dba.configureInstance(), you need to

ensure the connection string is interpreted as a string, for

example by surrounding the connection string with either single

(') or double (") quote marks. If you are using the JavaScript

implementation of AdminAPI issue:

MySQL JS > dba.configureInstance('myuser@www.example.com:3306')Assuming you are running MySQL Shell in the default interactive mode, you are prompted for your password. AdminAPI supports MySQL Shell's Pluggable Password Store, and once you store the password you used to connect to the instance you are no longer prompted for it.

This section explains the different ways you can create an InnoDB cluster, the requirements for server instances and the software you need to install to deploy a cluster.

InnoDB cluster supports the following deployment scenarios:

Production deployment: if you want to use InnoDB cluster in a full production environment you need to configure the required number of machines and then deploy your server instances to the machines. A production deployment enables you to exploit the high availability features of InnoDB cluster to their full potential. See Section 21.2.4, “Production Deployment of InnoDB Cluster” for instructions.

Sandbox deployment: if you want to test out InnoDB cluster before committing to a full production deployment, the provided sandbox feature enables you to quickly set up a cluster on your local machine. Sandbox server instances are created with the required configuration and you can experiment with InnoDB cluster to become familiar with the technologies employed. See Section 21.2.6, “Sandbox Deployment of InnoDB Cluster” for instructions.

ImportantA sandbox deployment is not suitable for use in a full production environment.

Before installing a production deployment of InnoDB cluster, ensure that the server instances you intend to use meet the following requirements.

InnoDB cluster uses Group Replication and therefore your server instances must meet the same requirements. See Section 18.9.1, “Group Replication Requirements”. AdminAPI provides the

dba.checkInstanceConfiguration()method to verify that an instance meets the Group Replication requirements, and thedba.configureInstance()method to configure an instance to meet the requirements.NoteWhen using a sandbox deployment the instances are configured to meet these requirements automatically.

Group Replication members can contain tables using a storage engine other than

InnoDB, for exampleMyISAM. Such tables cannot be written to by Group Replication, and therefore when using InnoDB cluster. To be able to write to such tables with InnoDB cluster, convert all such tables toInnoDBbefore using the instance in a InnoDB cluster.The Performance Schema must be enabled on any instance which you want to use with InnoDB cluster.

The provisioning scripts that MySQL Shell uses to configure servers for use in InnoDB cluster require access to Python version 2.7. For a sandbox deployment Python is required on the single machine used for the deployment, production deployments require Python on each server instance which should run MySQL Shell locally, see Persisting Settings.

On Windows MySQL Shell includes Python and no user configuration is required. On Unix Python must be found as part of the shell environment. To check that your system has Python configured correctly issue:

$

/usr/bin/env pythonIf a Python interpreter starts, no further action is required. If the previous command fails, create a soft link between

/usr/bin/pythonand your chosen Python binary.From version 8.0.17, instances must use a unique

server_idwithin a InnoDB cluster. When you use theCluster.addInstance(instance)server_idofinstanceis already used by an instance in the cluster then the operation fails with an error.

The method you use to install InnoDB cluster depends on the type of deployment you intend to use. For a sandbox deployment install the components of InnoDB cluster to a single machine. A sandbox deployment is local to a single machine, therefore the install needs to only be done once on the local machine. For a production deployment install the components to each machine that you intend to add to your cluster. A production deployment uses multiple remote host machines running MySQL server instances, so you need to connect to each machine using a tool such as SSH or Windows remote desktop to carry out tasks such as installing components. The following methods of installing InnoDB cluster are available:

Downloading and installing the components using the following documentation:

MySQL Server - see Chapter 2, Installing and Upgrading MySQL.

MySQL Shell - see Installing MySQL Shell.

MySQL Router - see Installing MySQL Router.

On Windows you can use the MySQL Installer for Windows for a sandbox deployment. For details, see Section 2.3.3.3.1.1, “High Availability”.

Once you have installed the software required by InnoDB cluster choose to follow either Section 21.2.6, “Sandbox Deployment of InnoDB Cluster” or Section 21.2.4, “Production Deployment of InnoDB Cluster”.

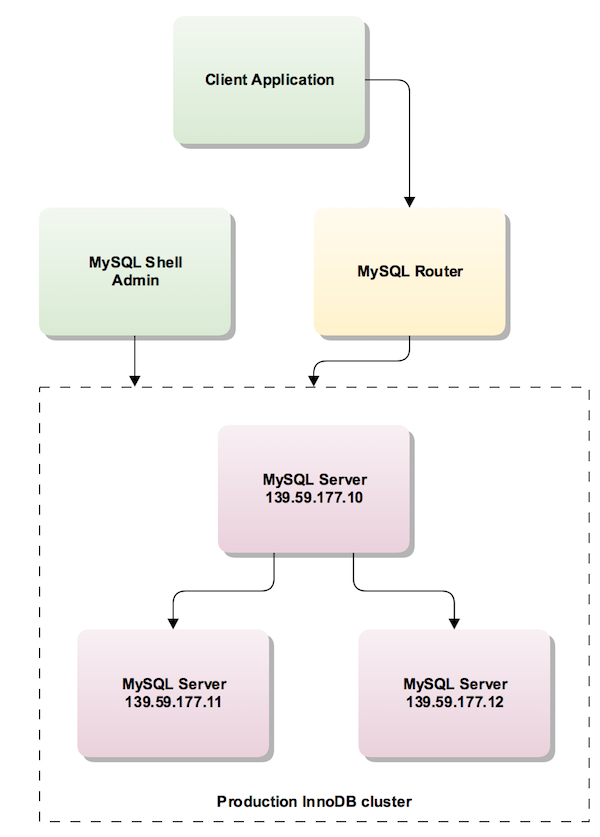

When working in a production environment, the MySQL server instances which make up an InnoDB cluster run on multiple host machines as part of a network rather than on single machine as described in Section 21.2.6, “Sandbox Deployment of InnoDB Cluster”. Before proceeding with these instructions you must install the required software to each machine that you intend to add as a server instance to your cluster, see Section 21.2.3, “Methods of Installing”.

The following diagram illustrates the scenario you work with in this section:

Unlike a sandbox deployment, where all instances are deployed locally to one machine which AdminAPI has local file access to and can persist configuration changes, for a production deployment you must persist any configuration changes on the instance. How you do this depends on the version of MySQL running on the instance, see Persisting Settings.

To pass a server's connection information to AdminAPI, use URI-like connection strings or a data dictionary; see Section 4.2.5, “Connecting to the Server Using URI-Like Strings or Key-Value Pairs”. In this documentation, URI-like strings are shown.

The following sections describe how to deploy a production InnoDB cluster.

The user account used to administer an instance does not have

to be the root account, however the user needs to be assigned

full read and write privileges on the InnoDB cluster

metadata tables in addition to full MySQL administrator

privileges (SUPER, GRANT

OPTION, CREATE,

DROP and so on). The preferred method to

create users to administer the cluster is using the

clusterAdmin option with the

dba.configureInstance(), and

Cluster.addInstance()ic

is shown in examples.

The clusterAdmin user name and password

must be the same on all instances that belong to a cluster.

If only read operations are needed (such as for monitoring purposes), an account with more restricted privileges can be used. See Configuring Users for InnoDB Cluster.

As part of using Group Replication, InnoDB cluster creates

internal users which enable replication between the servers in

the cluster. These users are internal to the cluster, and the

user name of the generated users follows a naming scheme of

mysql_innodb_cluster_r[.

The hostname used for the internal users depends on whether

the 10_numbers]ipWhitelist option has been configured.

If ipWhitelist is not configured, it

defaults to AUTOMATIC and the internal

users are created using both the wildcard %

character and localhost for the hostname

value. When ipWhitelist has been

configured, for each address in the

ipWhitelist list an internal user is

created.

For more information, see

Creating a Whitelist of Servers.

Each internal user has a randomly generated password. The randomly generated users are given the following grants:

GRANT REPLICATION SLAVE ON *.* to internal_user;The internal user accounts are created on the seed instance and then replicated to the other instances in the cluster. The internal users are:

generated when creating a new cluster by issuing

dba.createCluster()generated when adding a new instance to the cluster by issuing

Cluster.addInstance()

In addition, the

Cluster.rejoinInstance()ipWhitelist option is

used to specify a hostname. For example by issuing:

Cluster.rejoinInstance({ipWhitelist: "192.168.1.1/22"});

all previously existing internal users are removed and a new

internal user is created, taking into account the

ipWhitelist value used.

For more information on the internal users required by Group Replication, see Section 18.2.1.3, “User Credentials”.

The production instances which make up a cluster run on separate machines, therefore each machine must have a unique host name and be able to resolve the host names of the other machines which run server instances in the cluster. If this is not the case, you can:

configure each machine to map the IP of each other machine to a hostname. See your operating system documentation for details. This is the recommended solution.

set up a DNS service

configure the

report_hostvariable in the MySQL configuration of each instance to a suitable externally reachable address

InnoDB cluster supports using IP addresses instead of host

names. From MySQL Shell 8.0.18, AdminAPI supports

IPv6 addresses if the target MySQL Server version is higher

than 8.0.13. When using MySQL Shell 8.0.18 or higher, if all

cluster instances are running 8.0.14 or higher then you can

use an IPv6 or hostname that resolves to an IPv6 address for

instance connection strings and with options such as

localAddress, groupSeeds

and ipWhitelist. For more information on

using IPv6 see Section 18.4.5, “Support For IPv6 And For Mixed IPv6 And IPv4 Groups”.

Previous versions support IPv4 addresses only.

In this procedure the host name

ic- is

used in examples.

number

To verify whether the hostname of a MySQL server is correctly configured, execute the following query to see how the instance reports its own address to other servers and try to connect to that MySQL server from other hosts using the returned address:

SELECT coalesce(@@report_host, @@hostname);

Instances that belong to a cluster have a

localAddress, which is the

group_replication_local_address,

and this address is used for internal connections between the

instances in the cluster and is not for use by clients. When

you create a cluster or add instances to a cluster, by default

the localAddress port is calculated by

multiplying the target instance's

port value by 10 and then

adding one to the result. For example, when the

port of the target instance

is the default value of 3306, the calculated

localAddress port is 33061. You should

ensure that port numbers used by your cluster instances are

compatible with the way localAddress is

calculated. For example, if the server instance being used to

create a cluster has a port

number higher than 6553, the

dba.createCluster() operation fails because

the calculated localAddress port number

exceeds the maximum valid port which is 65535. To avoid this

situation either use a lower

port value on the instances

you use for InnoDB cluster, or manually assign the

localAddress value, for example:

mysql-js> dba.createCluster('testCluster', {'localAddress':'icadmin@ic-1:33061'}If your instances are using SELinux, you need to ensure that the ports used by InnoDB cluster are open so that the instances can communicate with each other. See How do I use Group Replication with SELinux?.

The AdminAPI commands you use to work with a cluster

and its server instances modify the configuration of the

instances. Depending on the way MySQL Shell is connected to

an instance and the version of MySQL installed on the

instance, these configuration changes can be persisted to the

instance automatically. Persisting settings to the instance

ensures that configuration changes are retained after the

instance restarts, for background information see

SET

PERSIST. This is essential for reliable cluster

usage, for example if settings are not persisted then an

instance which has been added to a cluster does not rejoin the

cluster after a restart because configuration changes are

lost. Persisting changes is required after the following

operations:

dba.configureInstance()dba.createCluster()Cluster.addInstance()Cluster.removeInstance()Cluster.rejoinInstance()

Instances which meet the following requirements support persisting configuration changes automatically:

the instance is running MySQL version 8.0.11 or later

persisted_globals_loadis set toONthe instance has not been started with the

--no-defaultsoption

Instances which do not meet these requirements do not support persisting configuration changes automatically, when AdminAPI operations result in changes to the instance's settings to be persisted you receive warnings such as:

WARNING: On instance 'localhost:3320' membership change cannot be persisted since MySQL version 5.7.21 does not support the SET PERSIST command (MySQL version >= 8.0.5 required). Please use the <Dba>.configureLocalInstance command locally to persist the changes.

When AdminAPI commands are issued against the MySQL

instance which MySQL Shell is currently running on, in other

words the local instance, MySQL Shell persists configuration

changes directly to the instance. On local instances which

support persisting configuration changes automatically,

configuration changes are persisted to the instance's

mysqld-auto.cnf file and the

configuration change does not require any further steps. On

local instances which do not support persisting configuration

changes automatically, you need to make the changes locally,

see Configuring Instances with

dba.configureLocalInstance().

When run against a remote instance, in other words an instance

other than the one which MySQL Shell is currently running on,

if the instance supports persisting configuration changes

automatically, the AdminAPI commands persist

configuration changes to the instance's

mysql-auto.conf option file. If a remote

instance does not support persisting configuration changes

automatically, the AdminAPI commands can not

automatically configure the instance's option file. This means

that AdminAPI commands can read information from the

instance, for example to display the current configuration,

but changes to the configuration cannot be persisted to the

instance's option file. In this case, you need to persist the

changes locally, see

Configuring Instances with

dba.configureLocalInstance().

When working with a production deployment it can be useful to

configure verbose logging for MySQL Shell, the information in

the log can help you to find and resolve any issues that might

occur when you are preparing server instances to work as part

of InnoDB cluster. To start MySQL Shell with a verbose

logging level use the

--log-level option:

shell> mysqlsh --log-level=DEBUG3

The DEBUG3 is recommended, see

--log-level for more

information. When DEBUG3 is set the

MySQL Shell log file contains lines such as Debug:

execute_sql( ... ) which contain the SQL queries

that are executed as part of each AdminAPI call. The

log file generated by MySQL Shell is located in

~/.mysqlsh/mysqlsh.log for Unix-based

systems; on Microsoft Windows systems it is located in

%APPDATA%\MySQL\mysqlsh\mysqlsh.log. See

MySQL Shell Logging and Debug for more

information.

In addition to enabling the MySQL Shell log level, you can configure the amount of output AdminAPI provides in MySQL Shell after issuing each command. To enable the amount of AdminAPI output, in MySQL Shell issue:

mysql-js> dba.verbose=2

This enables the maximum output from AdminAPI calls. The available levels of output are:

0 or OFF is the default. This provides minimal output and is the recommended level when not troubleshooting.

1 or ON adds verbose output from each call to the AdminAPI.

2 adds debug output to the verbose output providing full information about what each call to AdminAPI executes.

AdminAPI provides the

dba.configureInstance() function that

checks if an instance is suitably configured for

InnoDB cluster usage, and configures the instance if it

finds any settings which are not compatible with

InnoDB cluster. You run the

dba.configureInstance() command against an

instance and it checks all of the settings required to enable

the instance to be used for InnoDB cluster usage. If the

instance does not require configuration changes, there is no

need to modify the configuration of the instance, and the

dba.configureInstance() command output

confirms that the instance is ready for InnoDB cluster

usage. If any changes are required to make the instance

compatible with InnoDB cluster, a report of the incompatible

settings is displayed, and you can choose to let the command

make the changes to the instance's option file. Depending on

the way MySQL Shell is connected to the instance, and the

version of MySQL running on the instance, you can make these

changes permanent by persisting them to a remote instance's

option file, see

Persisting Settings.

Instances which do not support persisting configuration

changes automatically require that you configure the instance

locally, see Configuring Instances with

dba.configureLocalInstance().

Alternatively you can make the changes to the instance's

option file manually, see Section 4.2.2.2, “Using Option Files” for

more information. Regardless of the way you make the

configuration changes, you might have to restart MySQL to

ensure the configuration changes are detected.

The syntax of the dba.configureInstance()

command is:

dba.configureInstance([instance][,options])

where instance is an instance

definition, and options is a data

dictionary with additional options to configure the operation.

The command returns a descriptive text message about the

operation's result.

The instance definition is the

connection data for the instance, see

Section 4.2.5, “Connecting to the Server Using URI-Like Strings or Key-Value Pairs”. If

the target instance already belongs to an InnoDB cluster an

error is generated and the process fails.

The options dictionary can contain the following:

mycnfPath- the path to the MySQL option file of the instance.outputMycnfPath- alternative output path to write the MySQL option file of the instance.password- the password to be used by the connection.clusterAdmin- the name of an InnoDB cluster administrator user to be created. The supported format is the standard MySQL account name format. Supports identifiers or strings for the user name and host name. By default if unquoted it assumes input is a string.clusterAdminPassword- the password for the InnoDB cluster administrator account being created usingclusterAdmin. Although you can specify using this option, this is a potential security risk. If you do not specify this option, but do specify theclusterAdminoption, you are prompted for the password at the interactive prompt.clearReadOnly- a boolean value used to confirm thatsuper_read_onlyshould be set to off, see Super Read-only and Instances.interactive- a boolean value used to disable the interactive wizards in the command execution, so that prompts are not provided to the user and confirmation prompts are not shown.restart- a boolean value used to indicate that a remote restart of the target instance should be performed to finalize the operation.

Although the connection password can be contained in the instance definition, this is insecure and not recommended. Use the MySQL Shell Pluggable Password Store to store instace passwords securely.

Once dba.configureInstance() is issued

against an instance, the command checks if the instance's

settings are suitable for InnoDB cluster usage. A report is

displayed which shows the settings required by

InnoDB cluster

. If the instance does not require any changes to its settings

you can use it in an InnoDB cluster, and can proceed to

Creating the Cluster. If the instance's settings

are not valid for InnoDB cluster usage the

dba.configureInstance() command displays

the settings which require modification. Before configuring

the instance you are prompted to confirm the changes shown in

a table with the following information:

Variable- the invalid configuration variable.Current Value- the current value for the invalid configuration variable.Required Value- the required value for the configuration variable.

How you proceed depends on whether the instance supports

persisting settings, see

Persisting Settings.

When dba.configureInstance() is issued

against the MySQL instance which MySQL Shell is currently

running on, in other words the local instance, it attempts to

automatically configure the instance. When

dba.configureInstance() is issued against a

remote instance, if the instance supports persisting

configuration changes automatically, you can choose to do

this.

If a remote instance does not support persisting the changes

to configure it for InnoDB cluster usage, you have to

configure the instance locally. See

Configuring Instances with

dba.configureLocalInstance().

In general, a restart of the instance is not required after

dba.configureInstance() configures the

option file, but for some specific settings a restart might be

required. This information is shown in the report generated

after issuing dba.configureInstance(). If

the instance supports the

RESTART statement, MySQL Shell

can shutdown and then start the instance. This ensures that

the changes made to the instance's option file are detected by

mysqld. For more information see

RESTART.

After executing a RESTART

statement, the current connection to the instance is lost.

If auto-reconnect is enabled, the connection is

reestablished after the server restarts. Otherwise, the

connection must be reestablished manually.

The dba.configureInstance() method verifies

that a suitable user is available for cluster usage, which is

used for connections between members of the cluster, see

User Privileges. The

recommended way to add a suitable user is to use the

clusterAdmin option, which enables you to

configure the cluster user and password when calling the

operation. For example:

mysql-js> dba.configureInstance('root@ic-1:3306', \

{clusterAdmin: "'icadmin'@'ic-1%'"});

The interactive prompt requests the password required by the

specified user. This option should be used with a connection

based on a user which has the privileges to create users with

suitable privileges, in this example the root user is used.

The created clusterAdmin user is granted

the privileges to be able to administer the cluster. See

Configuring Users for InnoDB Cluster for more

information. The format of the user names accepted by the

clusterAdmin option follows the standard

MySQL account name format, see

Section 6.2.4, “Specifying Account Names”. In this procedure the name

icadmin is used, and that name is required

for further operations such as adding instances to the

cluster.

If you do not specify a user to administer the cluster, in interactive mode a wizard enables you to choose one of the following options:

enable remote connections for the root user

create a new user, the equivalent of specifying the

clusterAdminoptionno automatic configuration, in which case you need to manually create the user

The following example demonstrates the option to create a new user for cluster usage.

mysql-js> dba.configureLocalInstance('root@localhost:3306')

Please provide the password for 'root@localhost:3306':

Please specify the path to the MySQL configuration file: /etc/mysql/mysql.conf.d/mysqld.cnf

Validating instance...

The configuration has been updated but it is required to restart the server.

{

"config_errors": [

{

"action": "restart",

"current": "OFF",

"option": "enforce_gtid_consistency",

"required": "ON"

},

{

"action": "restart",

"current": "OFF",

"option": "gtid_mode",

"required": "ON"

},

{

"action": "restart",

"current": "0",

"option": "log_bin",

"required": "1"

},

{

"action": "restart",

"current": "0",

"option": "log_slave_updates",

"required": "ON"

},

{

"action": "restart",

"current": "FILE",

"option": "master_info_repository",

"required": "TABLE"

},

{

"action": "restart",

"current": "FILE",

"option": "relay_log_info_repository",

"required": "TABLE"

},

{

"action": "restart",

"current": "OFF",

"option": "transaction_write_set_extraction",

"required": "XXHASH64"

}

],

"errors": [],

"restart_required": true,

"status": "error"

}

mysql-js>

If the instance has

super_read_only=ON then you

might need to confirm that AdminAPI can set

super_read_only=OFF. See

Super Read-only and Instances for more

information.

Once you have prepared your instances, use the

dba.createCluster() function to create the

cluster, using the instance which MySQL Shell is connected to

as the seed instance for the cluster. The seed instance is

replicated to the other instances that you add to the cluster,

making them replicas of the seed instance. In this procedure

the ic-1 instance is used as the seed. When you issue

dba.createCluster(

MySQL Shell creates a classic MySQL protocol session to the server

instance connected to the MySQL Shell's current global

session. For example, to create a cluster called

name)testCluster and assign the returned

cluster to a variable called cluster:

mysql-js> var cluster = dba.createCluster('testCluster')

Validating instance at icadmin@ic-1:3306...

This instance reports its own address as ic-1

Instance configuration is suitable.

Creating InnoDB cluster 'testCluster' on 'icadmin@ic-1:3306'...

Adding Seed Instance...

Cluster successfully created. Use Cluster.addInstance() to add MySQL instances.

At least 3 instances are needed for the cluster to be able to withstand up to

one server failure.

This pattern of assigning the returned cluster to a variable enables you to then execute further operations against the cluster using the Cluster object's methods. The returned Cluster object uses a new session, independent from the MySQL Shell's global session. This ensures that if you change the MySQL Shell global session, the Cluster object maintains its session to the instance.

The dba.createCluster() operation supports

MySQL Shell's interactive option. When

interactive is on, prompts appear in the

following situations:

when run on an instance that belongs to a cluster and the

adoptFromGroption is false, you are asked if you want to adopt an existing clusterwhen the

forceoption is not used (not set totrue), you are asked to confirm the creation of a multi-primary cluster

If you encounter an error related to metadata being inaccessible you might have the loopback network interface configured. For correct InnoDB cluster usage disable the loopback interface.

To check the cluster has been created, use the cluster

instance's status() function. See

Checking a cluster's Status with

Cluster.status()

Once server instances belong to a cluster it is important to

only administer them using MySQL Shell and

AdminAPI. Attempting to manually change the

configuration of Group Replication on an instance once it

has been added to a cluster is not supported. Similarly,

modifying server variables critical to InnoDB cluster,

such as server_uuid, after

an instance is configured using AdminAPI is not

supported.

When you create a cluster using MySQL Shell 8.0.14 and later,

you can set the timeout before instances are expelled from the

cluster, for example when they become unreachable. Pass the

expelTimeout option to the

dba.createCluster() operation, which

configures the

group_replication_member_expel_timeout

variable on the seed instance. The

expelTimeout option can take an integer

value in the range of 0 to 3600. All instances running MySQL

server 8.0.13 and later which are added to a cluster with

expelTimeout configured are automatically

configured to have the same expelTimeout

value as configured on the seed instance.

For information on the other options which you can pass to

dba.createCluster(), see

Section 21.5, “Working with InnoDB Cluster”.

Use the

Cluster.addInstance(instance)instance is connection information

to a configured instance, see

Configuring Production Instances. From

version 8.0.17, Group Replication implements compatibility

policies which consider the patch version of the instances,

and the

Cluster.addInstance()

You need a minimum of three instances in the cluster to make it tolerant to the failure of one instance. Adding further instances increases the tolerance to failure of an instance. To add an instance to the cluster issue:

mysql-js> cluster.addInstance('icadmin@ic-2:3306')

A new instance will be added to the InnoDB cluster. Depending on the amount of

data on the cluster this might take from a few seconds to several hours.

Please provide the password for 'icadmin@ic-2:3306': ********

Adding instance to the cluster ...

Validating instance at ic-2:3306...

This instance reports its own address as ic-2

Instance configuration is suitable.

The instance 'icadmin@ic-2:3306' was successfully added to the cluster.

If you are using MySQL 8.0.17 or later you can choose how the instance recovers the transactions it requires to synchronize with the cluster. Only when the joining instance has recovered all of the transactions previously processed by the cluster can it then join as an online instance and begin processing transactions. For more information, see Section 21.2.5, “Using MySQL Clone with InnoDB cluster”.

Also in 8.0.17 and later, you can configure how

Cluster.addInstance()

Depending on which option you chose to recover the instance from the cluster, you see different output in MySQL Shell. Suppose that you are adding the instance ic-2 to the cluster, and ic-1 is the seed or donor.

When you use MySQL Clone to recover an instance from the cluster, the output looks like:

Validating instance at ic-2:3306... This instance reports its own address as ic-2:3306 Instance configuration is suitable. A new instance will be added to the InnoDB cluster. Depending on the amount of data on the cluster this might take from a few seconds to several hours. Adding instance to the cluster... Monitoring recovery process of the new cluster member. Press ^C to stop monitoring and let it continue in background. Clone based state recovery is now in progress. NOTE: A server restart is expected to happen as part of the clone process. If the server does not support the RESTART command or does not come back after a while, you may need to manually start it back. * Waiting for clone to finish... NOTE: ic-2:3306 is being cloned from ic-1:3306 ** Stage DROP DATA: Completed ** Clone Transfer FILE COPY ############################################################ 100% Completed PAGE COPY ############################################################ 100% Completed REDO COPY ############################################################ 100% Completed NOTE: ic-2:3306 is shutting down... * Waiting for server restart... ready * ic-2:3306 has restarted, waiting for clone to finish... ** Stage RESTART: Completed * Clone process has finished: 2.18 GB transferred in 7 sec (311.26 MB/s) State recovery already finished for 'ic-2:3306' The instance 'ic-2:3306' was successfully added to the cluster.The warnings about server restart should be observed, you might have to manually restart an instance. See Section 13.7.8.8, “RESTART Statement”.

When you use incremental recovery to recover an instance from the cluster, the output looks like:

Incremental distributed state recovery is now in progress. * Waiting for incremental recovery to finish... NOTE: 'ic-2:3306' is being recovered from 'ic-1:3306' * Distributed recovery has finished

To cancel the monitoring of the recovery phase, issue

CONTROL+C. This stops the monitoring but the

recovery process continues in the background. The

waitRecovery integer option can be used

with the

Cluster.addInstance()

0: do not wait and let the recovery process finish in the background;

1: wait for the recovery process to finish;

2: wait for the recovery process to finish; and show detailed static progress information;

3: wait for the recovery process to finish; and show detailed dynamic progress information (progress bars);

By default, if the standard output which MySQL Shell is

running on refers to a terminal, the

waitRecovery option defaults to 3.

Otherwise, it defaults to 2. See

Monitoring Recovery Operations.

To verify the instance has been added, use the cluster

instance's status() function. For

example this is the status output of a sandbox cluster after

adding a second instance:

mysql-js> cluster.status()

{

"clusterName": "testCluster",

"defaultReplicaSet": {

"name": "default",

"primary": "ic-1:3306",

"ssl": "REQUIRED",

"status": "OK_NO_TOLERANCE",

"statusText": "Cluster is NOT tolerant to any failures.",

"topology": {

"ic-1:3306": {

"address": "ic-1:3306",

"mode": "R/W",

"readReplicas": {},

"role": "HA",

"status": "ONLINE"

},

"ic-2:3306": {

"address": "ic-2:3306",

"mode": "R/O",

"readReplicas": {},

"role": "HA",

"status": "ONLINE"

}

}

},

"groupInformationSourceMember": "mysql://icadmin@ic-1:3306"

}

How you proceed depends on whether the instance is local or

remote to the instance MySQL Shell is running on, and whether

the instance supports persisting configuration changes

automatically, see

Persisting Settings. If

the instance supports persisting configuration changes

automatically, you do not need to persist the settings

manually and can either add more instances or continue to the

next step. If the instance does not support persisting

configuration changes automatically, you have to configure the

instance locally. See

Configuring Instances with

dba.configureLocalInstance(). This is

essential to ensure that instances rejoin the cluster in the

event of leaving the cluster.

If the instance has

super_read_only=ON then you

might need to confirm that AdminAPI can set

super_read_only=OFF. See

Super Read-only and Instances for more

information.

Once you have your cluster deployed you can configure MySQL Router to provide high availability, see Section 21.4, “Using MySQL Router with InnoDB Cluster”.

In MySQL 8.0.17, InnoDB cluster integrates the MySQL Clone plugin to provide automatic provisioning of joining instances. The process of retrieving the cluster's data so that the instance can synchronize with the cluster is called distributed recovery. When an instance needs to recover a cluster's transactions we distinguish between the donor, which is the cluster instance that provides the data, and the receiver, which is the instance that receives the data from the donor. In previous versions, Group Replication provided only asynchronous replication to recover the transactions required for the joining instance to synchronize with the cluster so that it could join the cluster. For a cluster with a large amount of previously processed transactions it could take a long time for the new instance to recover all of the transactions before being able to join the cluster. Or a cluster which had purged GTIDs, for example as part of regular maintenance, could be missing some of the transactions required to recover the new instance. In such cases the only alternative was to manually provision the instance using tools such as MySQL Enterprise Backup, as shown in Section 18.4.6, “Using MySQL Enterprise Backup with Group Replication”.

MySQL Clone provides an alternative way for an instance to recover the transactions required to synchronize with a cluster. Instead of relying on asynchronous replication to recover the transactions, MySQL Clone takes a snapshot of the data on the donor instance and then transfers the snapshot to the receiver.

All previous data in the receiver is destroyed during a clone operation. All MySQL settings not stored in tables are however maintained.

Once a clone operation has transferred the snapshot to the receiver, if the cluster has processed transactions while the snapshot was being transferred, asynchronous replication is used to recover any required data for the receiver to be synchronized with the cluster. This can be much more efficient than the instance recovering all of the transactions using asynchronous replication, and avoids any issues caused by purged GTIDs, enabling you to quickly provision new instances for InnoDB cluster. For more information, see Section 5.6.7, “The Clone Plugin” and Section 18.4.3.1, “Cloning for Distributed Recovery”

In contrast to using MySQL Clone, incremental recovery is the process where an instance joining a cluster uses only asynchronous replication to recover an instance from the cluster. When an InnoDB cluster is configured to use MySQL Clone, instances which join the cluster use either MySQL Clone or incremental recovery to recover the cluster's transactions. By default, the cluster automatically chooses the most suitable method, but you can optionally configure this behavior, for example to force cloning, which replaces any transactions already processed by the joining instance. When you are using MySQL Shell in interactive mode, the default, if the cluster is not sure it can proceed with recovery it provides an interactive prompt. This section describes the different options you are offered, and the different scenarios which influence which of the options you can choose.

In addition, the output of

Cluster.status()RECOVERING state includes

recovery progress information to enable you to easily monitor

recovery operations, whether they are using MySQL Clone or

incremental recovery. InnoDB cluster provides additional

information about instances using MySQL Clone in the output of

Cluster.status()

A InnoDB cluster that uses MySQL Clone provides the following additional behavior.

From version 8.0.17, by default when a new cluster is

created on an instance where the MySQL Clone plugin is

available then it is automatically installed and the cluster

is configured to support cloning. The InnoDB cluster

recovery accounts are created with the required

BACKUP_ADMIN privilege.

Set the disableClone Boolean option to

true to disable MySQL Clone for the

cluster. In this case a metadata entry is added for this

configuration and the MySQL Clone plugin is uninstalled if

it is installed. You can set the

disableClone option when you issue

dba.createCluster(), or at any time when

the cluster is running using

Cluster.setOption()

MySQL Clone can be used for a joining

instance if the new instance is

running MySQL 8.0.17 or later, and there is at least one

donor in the cluster (included in the

group_replication_group_seeds

list) running MySQL 8.0.17 or later. A cluster using MySQL

Clone follows the behavior documented at

Adding Instances to a Cluster, with the addition

of a possible choice of how to transfer the data required to

recover the instance from the cluster. How

Cluster.addInstance(instance)

Whether MySQL Clone is supported.

Whether incremental recovery is possible or not, which depends on the availability of binary logs. For example, if a donor instance has all binary logs required (

GTID_PURGEDis empty) then incremental recovery is possible. If no cluster instance has all binary logs required then incremental recovery is not possible.Whether incremental recovery is appropriate or not. Even though incremental recovery might be possible, because it has the potential to clash with data already on the instance, the GTID sets on the donor and receiver are checked to make sure that incremental recovery is appropriate. The following are possible results of the comparison:

New: the receiver has an empty

GTID_EXECUTEDGTID setIdentical: the receiver has a GTID set identical to the donor’s GTID set

Recoverable: the receiver has a GTID set that is missing transactions but these can be recovered from the donor

Irrecoverable: the donor has a GTID set that is missing transactions, possibly they have been purged

Diverged: the GTID sets of the donor and receiver have diverged

When the result of the comparison is determined to be Identical or Recoverable, incremental recovery is considered appropriate. When the result of the comparison is determined to be Irrecoverable or Diverged, incremental recovery is not considered appropriate.

For an instance considered New, incremental recovery cannot be considered appropriate because it is impossible to determine if the binary logs have been purged, or even if the

GTID_PURGEDandGTID_EXECUTEDvariables were reset. Alternatively, it could be that the server had already processed transactions before binary logs and GTIDs were enabled. Therefore in interactive mode, you have to confirm that you want to use incremental recovery.The state of the

gtidSetIsCompleteoption. If you are sure a cluster has been created with a complete GTID set, and therefore instances with empty GTID sets can be added to it without extra confirmations, set the cluster levelgtidSetIsCompleteBoolean option totrue.WarningSetting the

gtidSetIsCompleteoption totruemeans that joining servers are recovered regardless of any data they contain, use with caution. If you try to add an instance which has applied transactions you risk data corruption.

The combination of these factors influence how instances

join the cluster when you issue

Cluster.addInstance()recoveryMethod option is set to

auto by default, which means that in

MySQL Shell's interactive mode, the cluster selects the

best way to recover the instance from the cluster, and the

prompts advise you how to proceed. In other words the

cluster recommends using MySQL Clone or incremental recovery

based on the best approach and what the server supports. If

you are not using interactive mode and are scripting

MySQL Shell, you must set recoveryMethod

to the type of recovery you want to use - either

clone or incremental.

This section explains the different possible scenarios.

When you are using MySQL Shell in interactive mode, the main prompt with all of the possible options for adding the instance is:

Please select a recovery method [C]lone/[I]ncremental recovery/[A]bort (default Clone):

Depending on the factors mentioned, you might not be offered all of these options. The scenarios described later in this section explain which options you are offered. The options offered by this prompt are:

Clone: choose this option to clone the donor to the instance which you are adding to the cluster, deleting any transactions the instance contains. The MySQL Clone plugin is automatically installed. The InnoDB cluster recovery accounts are created with the required

BACKUP_ADMINprivilege. Assuming you are adding an instance which is either empty (has not processed any transactions) or which contains transactions you do not want to retain, select the Clone option. The cluster then uses MySQL Clone to completely overwrite the joining instance with a snapshot from an donor cluster member. To use this method by default and disable this prompt, set the cluster'srecoveryMethodoption toclone.Incremental recovery choose this option to use incremental recovery to recover all transactions processed by the cluster to the joining instance using asynchronous replication. Incremental recovery is appropriate if you are sure all updates ever processed by the cluster were done with GTIDs enabled, there are no purged transactions and the new instance contains the same GTID set as the cluster or a subset of it. To use this method by default, set the

recoveryMethodoption toincremental.

The combination of factors mentioned influences which of these options is available at the prompt as follows:

If the

group_replication_clone_threshold

system variable has been manually changed outside of

AdminAPI, then the cluster might decide to use

Clone recovery instead of following these scenarios.

In a scenario where

incremental recovery is possible

incremental recovery is not appropriate

Clone is supported

you can choose between any of the options. It is recommended that you use MySQL Clone, the default.

In a scenario where

incremental recovery is possible

incremental recovery is appropriate

you are not provided with the prompt, and incremental recovery is used.

In a scenario where

incremental recovery is possible

incremental recovery is not appropriate

Clone is not supported or is disabled

you cannot use MySQL Clone to add the instance to the cluster. You are provided with the prompt, and the recommended option is to proceed with incremental recovery.

In a scenario where

incremental recovery is not possible

Clone is not supported or is disabled

you cannot add the instance to the cluster and an ERROR: The target instance must be either cloned or fully provisioned before it can be added to the target cluster. Cluster.addInstance: Instance provisioning required (RuntimeError) is shown. This could be the result of binary logs being purged from all cluster instances. It is recommended to use MySQL Clone, by either upgrading the cluster or setting the

disableCloneoption tofalse.In a scenario where

incremental recovery is not possible

Clone is supported

you can only use MySQL Clone to add the instance to the cluster. This could be the result of the cluster missing binary logs, for example when they have been purged.

Once you select an option from the prompt, by default the progress of the instance recovering the transactions from the cluster is displayed. This monitoring enables you to check the recovery phase is working and also how long it should take for the instance to join the cluster and come online. To cancel the monitoring of the recovery phase, issue CONTROL+C.

When the

Cluster.checkInstanceState()disableClone is

false) the operation provides a warning

that the Clone can be used. For example:

The cluster transactions cannot be recovered on the instance, however,

Clone is available and can be used when adding it to a cluster.

{

"reason": "all_purged",

"state": "warning"

}Similarly, on an instance where Clone is either not available or has been disabled and the binary logs are not available, for example because they were purged, then the output includes:

The cluster transactions cannot be recovered on the instance.

{

"reason": "all_purged",

"state": "warning"

}

This section explains how to set up a sandbox InnoDB cluster deployment. You create and administer your InnoDB clusters using MySQL Shell with the included AdminAPI. This section assumes familiarity with MySQL Shell, see MySQL Shell 8.0 (part of MySQL 8.0) for further information.

Initially deploying and using local sandbox instances of MySQL is a good way to start your exploration of InnoDB cluster. You can fully test out InnoDB cluster locally, prior to deployment on your production servers. MySQL Shell has built-in functionality for creating sandbox instances that are correctly configured to work with Group Replication in a locally deployed scenario.

Sandbox instances are only suitable for deploying and running on your local machine for testing purposes. In a production environment the MySQL Server instances are deployed to various host machines on the network. See Section 21.2.4, “Production Deployment of InnoDB Cluster” for more information.

This tutorial shows how to use MySQL Shell to create an InnoDB cluster consisting of three MySQL server instances. It consists of the following steps:

MySQL Shell includes the AdminAPI that adds the

dba global variable, which provides

functions for administration of sandbox instances. In this

example setup, you create three sandbox instances using

dba.deploySandboxInstance().

Start MySQL Shell from a command prompt by issuing the command:

shell> mysqlsh

MySQL Shell provides two scripting language modes, JavaScript

and Python, in addition to a native SQL mode. Throughout this

guide MySQL Shell is used primarily in JavaScript mode

. When MySQL Shell starts it is in JavaScript mode by

default. Switch modes by issuing \js for

JavaScript mode, \py for Python mode, and

\sql for SQL mode. Ensure you are in

JavaScript mode by issuing the \js command,

then execute:

mysql-js> dba.deploySandboxInstance(3310)

Terminating commands with a semi-colon is not required in JavaScript and Python modes.

The argument passed to

deploySandboxInstance() is the TCP port

number where the MySQL Server instance listens for

connections. By default the sandbox is created in a directory

named

$HOME/mysql-sandboxes/

on Unix systems. For Microsoft Windows systems the directory

is

port%userprofile%\MySQL\mysql-sandboxes\.

port

The root user's password for the instance is prompted for.

Each sandbox instance uses the root user and password. This

is not recommended in production, see

Configuring Production Instances for

information about the clusterAdmin

option.

To deploy another sandbox server instance, repeat the steps followed for the sandbox instance at port 3310, choosing different port numbers for each instance. For each additional sandbox instance issue:

mysql-js> dba.deploySandboxInstance(port_number)

The clusterAdmin user name and password

must be the same on all instances that belong to a cluster.

In the case of a sandbox deployment, the root user name and

password must be the same on all instances.

To follow this tutorial, use port numbers 3310, 3320 and 3330 for the three sandbox server instances. Issue:

mysql-js>dba.deploySandboxInstance(mysql-js>3320)dba.deploySandboxInstance(3330)

To change the directory which sandboxes are stored in, for

example to run multiple sandboxes on one host for testing

purposes, use the MySQL Shell sandboxDir option. For example

to use a sandbox in the /home/user/sandbox1

directory, issue:

mysql-js> shell.options.sandboxDir='/home/user/sandbox1'

All subsequent sandbox related operations are then executed

against the instances found at

/home/user/sandbox1.

When you deploy sandboxes, MySQL Shell searches for the

mysqld binary which it then uses to create

the sandbox instance. You can configure where MySQL Shell

searches for the mysqld binary by

configuring the PATH environment variable.

This can be useful to test a new version of MySQL locally

before deploying it to production. For example, to use a

mysqld binary at the path

/home/user/mysql-latest/bin/mysqld issue:

PATH=/home/user/mysql-latest/bin/mysqld:$PATH

Then run MySQL Shell from the terminal where the

PATH environment variable is set. Any

sandboxes you deploy then use the mysqld

binary found at the configured path.

The next step is to create the InnoDB cluster while connected to the seed MySQL Server instance. The seed instance contains the data that you want to replicate to the other instances. In this example the sandbox instances are blank, therefore we can choose any instance. Sandboxes also use MySQL Clone, see Section 21.2.5, “Using MySQL Clone with InnoDB cluster”.

Connect MySQL Shell to the seed instance, in this case the one at port 3310:

mysql-js> \connect root@localhost:3310

The \connect MySQL Shell command is a

shortcut for the shell.connect() method:

mysql-js> shell.connect('root@localhost:3310')

Once you have connected, AdminAPI can write to the local instance's option file. This is different to working with a production deployment, where you would need to connect to the remote instance and run the MySQL Shell application locally on the instance before AdminAPI can write to the instance's option file.

Use the dba.createCluster() method to

create the InnoDB cluster with the currently connected

instance as the seed:

mysql-js> var cluster = dba.createCluster('testCluster')

The createCluster() method deploys the

InnoDB cluster metadata to the selected instance, and adds

the instance you are currently connected to as the seed

instance. The createCluster() method

returns the created cluster, in the example above this is

assigned to the cluster variable. The

parameter passed to the createCluster()

method is a symbolic name given to this InnoDB cluster, in

this case testCluster.

If the instance has

super_read_only=ON then you

might need to confirm that AdminAPI can set

super_read_only=OFF. See

Super Read-only and Instances for more

information.

The next step is to add more instances to the InnoDB cluster. Any transactions that were executed by the seed instance are re-executed by each secondary instance as it is added. This tutorial uses the sandbox instances that were created earlier at ports 3320 and 3330.

The seed instance in this example was recently created, so it is nearly empty. Therefore, there is little data that needs to be replicated from the seed instance to the secondary instances. In a production environment, where you have an existing database on the seed instance, you could use a tool such as MySQL Enterprise Backup to ensure that the secondaries have matching data before replication starts. This avoids the possibility of lengthy delays while data replicates from the primary to the secondaries. See Section 18.4.6, “Using MySQL Enterprise Backup with Group Replication”.

Add the second instance to the InnoDB cluster:

mysql-js> cluster.addInstance('root@localhost:3320')

The root user's password is prompted for. The specified instance is recovered from the seed instance. In other words the transactions from the seed are copied to the instance joining the cluster.

Add the third instance in the same way:

mysql-js> cluster.addInstance('root@localhost:3330')

The root user's password is prompted for.

At this point you have created a cluster with three instances: a primary, and two secondaries.

You can only specify localhost in

addInstance() if the instance is a

sandbox instance. This also applies to the implicit

addInstance() after issuing

createCluster().

Once the sandbox instances have been added to the cluster, the

configuration required for InnoDB cluster must be persisted

to each of the instance's option files. How you proceed

depends on whether the instance supports persisting

configuration changes automatically, see

Persisting Settings.

When the MySQL instance which you are using supports

persisting configuration changes automatically, adding the

instance automatically configures the instance. When the MySQL

instance which you are using does not support persisting

configuration changes automatically, you have to configure the

instance locally. See

Configuring Instances with

dba.configureLocalInstance().

To check the cluster has been created, use the cluster

instance's status() function. See

Checking a cluster's Status with

Cluster.status()

Once you have your cluster deployed you can configure MySQL Router to provide high availability, see Section 21.4, “Using MySQL Router with InnoDB Cluster”.

If you have an existing deployment of Group Replication and you

want to use it to create a cluster, pass the

adoptFromGR option to the

dba.createCluster() function. The created

InnoDB cluster matches whether the replication group is

running as single-primary or multi-primary.

To adopt an existing Group Replication group, connect to a group

member using MySQL Shell. In the following example a

single-primary group is adopted. We connect to

gr-member-2, a secondary instance, while

gr-member-1 is functioning as the group's

primary. Create a cluster using

dba.createCluster(), passing in the

adoptFromGR option. For example:

mysql-js> var cluster = dba.createCluster('prodCluster', {adoptFromGR: true});

A new InnoDB cluster will be created on instance 'root@gr-member-2:3306'.

Creating InnoDB cluster 'prodCluster' on 'root@gr-member-2:3306'...

Adding Seed Instance...

Cluster successfully created. Use cluster.addInstance() to add MySQL instances.

At least 3 instances are needed for the cluster to be able to withstand up to

one server failure.

If the instance has

super_read_only=ON then you

might need to confirm that AdminAPI can set

super_read_only=OFF. See

Super Read-only and Instances for more

information.

The new cluster matches the mode of the group. If the adopted group was running in single-primary mode then a single-primary cluster is created. If the adopted group was running in multi-primary mode then a multi-primary cluster is created.

This section explains how to upgrade your cluster. Much of the process of upgrading an InnoDB cluster consists of upgrading the instances in the same way as documented at Section 18.7, “Upgrading Group Replication”. This section focuses on the additional considerations for upgrading InnoDB cluster. Before starting an upgrade, you can use the MySQL Shell Upgrade Checker Utility to verify instances are ready for the upgrade.

When upgrading the metadata schema of clusters deployed by MySQL Shell versions before 8.0.19, a rolling upgrade of existing MySQL Router instances is required. This process minimizes disruption to applications during the upgrade. The rolling upgrade process must be performed in the following order:

Run the latest MySQL Shell version, connect the global session to the cluster and issue

dba.upgradeMetadata(). The upgrade function stops if an outdated MySQL Router instance is detected, at which point you can stop the upgrade process in MySQL Shell to resume later.Upgrade any detected out of date MySQL Router instances to the latest version. It is recommended to use the same MySQL Router version as MySQL Shell version.

Continue or restart the

dba.upgradeMetadata()operation to complete the metadata upgrade.

As AdminAPI evolves, some releases might require you to upgrade the metadata of existing clusters to ensure they are compatible with newer versions of MySQL Shell. For example, the addition of InnoDB ReplicaSet in version 8.0.19 means that the metadata schema has been upgraded to version 2.0. Regardless of whether you plan to use InnoDB ReplicaSet or not, to use MySQL Shell 8.0.19 or later with a cluster deployed using an earlier version of MySQL Shell, you must upgrade the metadata of your cluster.

Without upgrading the metadata you cannot use MySQL Shell

8.0.19 to change the configuration of a cluster created with

earlier versions. For example, you can only perform read

operations against the cluster such as

Cluster.status()Cluster.describe()Cluster.options()

This dba.upgradeMetadata() operation compares

the version of the metadata schema found on the cluster

MySQL Shell is currently connected to with the version of the

metadata schema supported by this MySQL Shell version. If the

installed metadata version is lower, an upgrade process is

started. The dba.upgradeMetadata() operation

then upgrades any automatically created MySQL Router users to have

the correct privileges. Manually created MySQL Router users with a

name not starting with mysql_router_ are not

automatically upgraded. This is an important step in upgrading

your cluster, only then can the MySQL Router application be

upgraded. To get information on which of the MySQL Router instances

registered with a cluster require the metadata upgrade, issue:

cluster.listRouters({'onlyUpgradeRequired':'true'})

{

"clusterName": "mycluster",

"routers": {

"example.com::": {

"hostname": "example.com",

"lastCheckIn": "2019-11-26 10:10:37",

"roPort": 6447,

"roXPort": 64470,

"rwPort": 6446,

"rwXPort": 64460,

"version": "8.0.18"

}

}

}

A cluster which is using the new metadata cannot be administered by earlier MySQL Shell versions, for example once you upgrade to version 8.0.19 you can no longer use version 8.0.18 or earlier to administer the cluster.

To upgrade a cluster's metadata, connect MySQL Shell's global

session to your cluster and use the

dba.upgradeMetadata() operation to upgrade

the cluster's metadata to the new metadata. For example:

mysql-js>\connect user@example.com:3306mysql-js>dba.upgradeMetadata()InnoDB Cluster Metadata Upgrade The cluster you are connected to is using an outdated metadata schema version 1.0.1 and needs to be upgraded to 2.0.0. Without doing this upgrade, no AdminAPI calls except read only operations will be allowed. The grants for the MySQL Router accounts that were created automatically when bootstrapping need to be updated to match the new metadata version's requirements. Updating router accounts... NOTE: 2 router accounts have been updated. Upgrading metadata at 'example.com:3306' from version 1.0.1 to version 2.0.0. Creating backup of the metadata schema... Step 1 of 1: upgrading from 1.0.1 to 2.0.0... Removing metadata backup... Upgrade process successfully finished, metadata schema is now on version 2.0.0

If you encounter an error related to the

clusterAdmin user missing privileges, follow

the instructions for granting the correct privileges.

This section covers trouble shooting the upgrade process.

MySQL Shell uses the host value of the provided connection

parameters as the target hostname used for AdminAPI

operations, namely to register the instance in the metadata

(for the dba.createCluster() and

Cluster.addInstance()hostname that is used or

reported by Group Replication, which uses the value of the

report_host system variable

when it is defined (in other words it is not

NULL), otherwise the value of

hostname is used. Therefore,

AdminAPI now follows the same logic to register the target

instance in the metadata and as the default value for the

group_replication_local_address

variable on instances, instead of using the host value from

the instance connection parameters. When the

report_host variable is set

to empty, Group Replication uses an empty value for the host

but AdminAPI (for example in commands such as

dba.checkInstanceConfiguration(),

dba.configureInstance(),

dba.createCluster(), and so on) reports the

hostname as the value used which is inconsistent with the

value reported by Group Replication. If an empty value is set

for the report_host system

variable, an error is generated. (Bug #28285389)

For a cluster created using a MySQL Shell version earlier than

8.0.16, an attempt to reboot the cluster from a complete

outage performed using version 8.0.16 or higher results in

this error. This is caused by a mismatch of the Metadata

values with the report_host

or hostname values reported

by the instances. The workaround is to:

Identify which of the instances is the “seed”, in other words the one with the most recent GTID set. The

dba.rebootClusterFromCompleteOutage()operation detects whether the instance is a seed and the operation generates an error if the current session is not connected to the most up-to-date instance.Set the

report_hostsystem variable to the value that is stored in the Metadata schema for the target instance. This value is thehostname:portpair used in the instance definition upon cluster creation. The value can be consulted by querying themysql_innodb_cluster_metadata.instancestable.For example, suppose a cluster was created using the following sequence of commands:

mysql-js>

\c clusterAdmin@localhost:3306mysql-js>dba.createCluster("myCluster")Therefore the hostname value stored in the metadata is “localhost” and for that reason,

report_hostmust be set to “localhost” on the seed.Reboot the cluster using only the seed instance. At the interactive prompts do not add the remaining instances to the cluster.

Use

Cluster.rescan()Remove the seed instance from the cluster

Stop mysqld on the seed instance and either remove the forced

report_hostsetting (step 2), or replace it with the value previously stored in the Metadata value.Restart the seed instance and add it back to the cluster using

Cluster.addInstance()

This allows a smooth and complete upgrade of the cluster to

the latest MySQL Shell version. Another possibility, that

depends on the use-case, is to simply set the value of

report_host on all cluster

members to match what has been registered in the Metadata

schema upon cluster creation.

This section describes how to use MySQL Router with InnoDB cluster

to achieve high availability. Regardless of whether you have

deployed a sandbox or production cluster, MySQL Router can configure

itself based on the InnoDB cluster's metadata using the

--bootstrap option. This

configures MySQL Router automatically to route connections to the

cluster's server instances. Client applications connect to

the ports MySQL Router provides, without any need to be aware of the

InnoDB cluster topology. In the event of a unexpected failure,

the InnoDB cluster adjusts itself automatically and MySQL Router

detects the change. This removes the need for your client

application to handle failover. For more information, see

Routing for MySQL InnoDB cluster.

Do not attempt to configure MySQL Router manually to redirect to the

ports of an InnoDB cluster. Always use the

--bootstrap option as this

ensures that MySQL Router takes its configuration from the

InnoDB cluster's metadata. See

Cluster Metadata and State.

The recommended deployment of MySQL Router is on the same host as the application. When using a sandbox deployment, everything is running on a single host, therefore you deploy MySQL Router to the same host. When using a production deployment, we recommend deploying one MySQL Router instance to each machine used to host one of your client applications. It is also possible to deploy MySQL Router to a common machine through which your application instances connect.

Assuming MySQL Router is already installed (see

Installing MySQL Router), use the

--bootstrap option to provide

the location of a server instance that belongs to the

InnoDB cluster. MySQL Router uses the included metadata cache plugin

to retrieve the InnoDB cluster's metadata, consisting of a

list of server instance addresses which make up the

InnoDB cluster and their role in the cluster. You pass the

URI-like connection string of the server that MySQL Router should

retrieve the InnoDB cluster metadata from. For example:

shell> mysqlrouter --bootstrap icadmin@ic-1:3306 --user=mysqlrouter

You are prompted for the instance password and encryption key for

MySQL Router to use. This encryption key is used to encrypt the

instance password used by MySQL Router to connect to the cluster. The

ports you can use to connect to the InnoDB cluster are also

displayed. The MySQL Router bootstrap process creates a

mysqlrouter.conf file, with the settings

based on the cluster metadata retrieved from the address passed to

the --bootstrap option, in the

above example icadmin@ic-1:3306. Based on the

InnoDB cluster metadata retrieved, MySQL Router automatically

configures the mysqlrouter.conf file,

including a metadata_cache section. If you are

using MySQL Router 8.0.14 and later, the

--bootstrap option

automatically configures MySQL Router to track and store active MySQL

InnoDB cluster Metadata server addresses at the path configured

by dynamic_state. This ensures

that when MySQL Router is restarted it knows which MySQL

InnoDB cluster Metadata server addresses are current. For more

information see the

dynamic_state documentation.

In earlier MySQL Router versions, metadata server information was

defined during Router's initial bootstrap operation and stored

statically as

bootstrap_server_addresses in

the configuration file, which contained the addresses for all

server instances in the cluster. For example:

[metadata_cache:prodCluster] router_id=1 bootstrap_server_addresses=mysql://icadmin@ic-1:3306,mysql://icadmin@ic-2:3306,mysql://icadmin@ic-3:3306 user=mysql_router1_jy95yozko3k2 metadata_cluster=prodCluster ttl=300

If using MySQL Router 8.0.13 or earlier, when you change the

topology of a cluster by adding another server instance after

you have bootstrapped MySQL Router, you need to update

bootstrap_server_addresses

based on the updated metadata. Either restart MySQL Router using the

--bootstrap option, or

manually edit the

bootstrap_server_addresses

section of the mysqlrouter.conf file and

restart MySQL Router.

The generated MySQL Router configuration creates TCP ports which you use to connect to the cluster. By default, ports for communicating with the cluster using both classic MySQL protocol and X Protocol are created. To use X Protocol the server instances must have X Plugin installed and configured, which is the default for MySQL 8.0 and later. The default available TCP ports are: